Program Announcement

On behalf of the Chairs and Program Committee of DigiPro 2017, we are honored to announce this year’s conference program. As in years past, we had the welcome challenge of selecting from a deep and competitive collection of submissions, and we’re extremely excited about what we’ve got in store.

Timetable

08:00 – 08:45 AM Registration and Coffee/Pastries

08:45 – 09:00 AM Introduction

09:00 – 10:15 AM Session #1 – Pipeline

10:15 – 10:30 AM Morning Break

10:30 – 11:20 AM Session #2 – Real-Time

11:20 – 11:30 AM Break (uncatered)

11:30 – 12:30 PM Keynote

12:30 – 02:00 PM Lunch

02:00 – 03:15 PM Session #3 – Characters

03:15 – 03:30 PM Afternoon Break

03:30 – 04:45 PM Session #4 – Case Studies

04:45 – 05:15 PM Conclusion

05:00 – 07:00 PM Reception

Session #1 – Pipeline

Pipeline X: A Feature Animation Pipeline on Microservices

– Dan Golembeski, Ray Forziati, Ben George, Doug Sherman (DreamWorks Animation)

Here we present a unique approach to building a highly scalable, multi-functional, and production-friendly feature animation pipeline on a core infrastructure composed of microservices. We discuss basic service-layer design, as well as the benefits and challenges of moving an entire animation studio's decades-old production processes to a new, transactional pipeline operating against a compartmentalized technology stack. The goal is to clean up the clutter of a legacy pipeline and enable a more flexible production environment using modern, web-based technology.

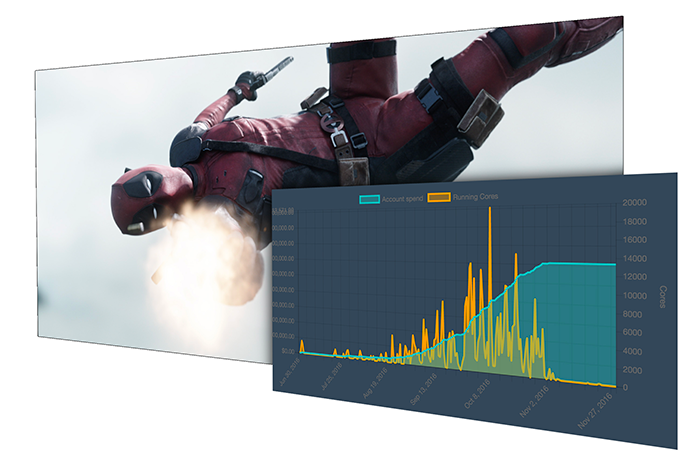

Cost Efficiency In The Cloud: Getting The Most Bang For Your Buck!

– Kevin Baillie (Atomic Fiction) and Monique Bradshaw (Conductor Technologies)

Though it offers immense and undeniable compute scalability, cloud computing has a reputation for being more expensive than the local alternatives. But does it have to be? We’ll use data from visual effects company Atomic Fiction to examine cost-optimization strategies, such as using preemptible instances, setting cost limits, choosing between vertical and horizontal scaling, and selecting instance types, and to show how those strategies can reduce production costs.

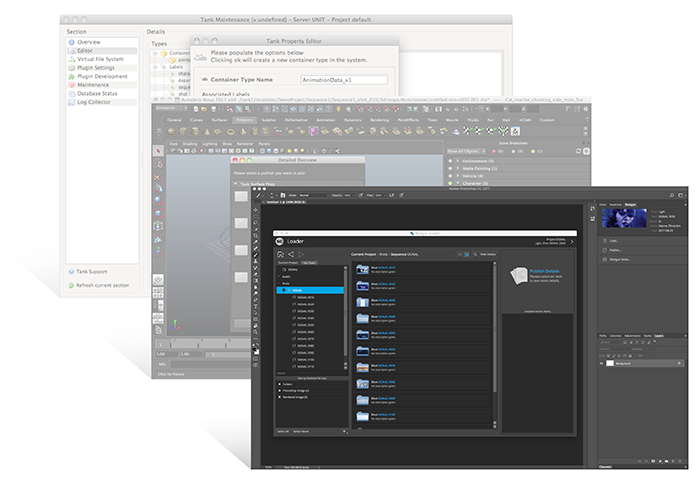

The Shotgun Pipeline Toolkit: Productizing and Democratizing Production Pipelines

– Josh Tomlinson, Manne Öhrström and Don Parker (Shotgun Software)

This talk presents the motivations and goals for developing the Shotgun Pipeline Toolkit (Toolkit), a platform for building, customizing, and evolving production pipelines. We cover the challenges of developing a product that is valuable for studios of all types and sizes, supports common operating systems, and is easy to use, all while providing the flexibility and customizability that creative studios require. We will show the evolution of Toolkit from concept to release and the lessons learned while trying to build pipeline components traditionally found only in studios with large development budgets. Finally, we look at recent work to lower the barrier to entry for Toolkit as a step towards democratizing the production pipeline.

Session #2 – Real-Time

Emotion Challenge: Building a New Photoreal Facial Pipeline for Games

– Alex Smith, Sven Pohle, Wan-Chun Ma, Chongyang Ma, Xian-Chun Wu, Yanbing Chen, Etienne Danvoye, Jorge Jimenez, Sanjit Patel, Mike Sanders and Cyrus Wilson

In recent years, the expected standard for facial animation and character performance in AAA video games has risen dramatically. The use of photogrammetric capture techniques for actor-likeness acquisition, coupled with video-based facial capture and solving methods, has improved quality across the industry. However, due to variability across project pipelines, increased per-project scope for performance capture, and a reliance on external vendors, it is often challenging to maintain visual consistency from project to project, and even from character to character within a single project. Given these factors, we identified the need for a unified, robust, and scalable pipeline for actor-likeness acquisition, character art, performance capture, and character animation.

Fortnite: Supercharging CG Animation Pipelines with Game Engine Technology

– Brian Pohl, Andrew Harris, Michael Balog, Michael Clausen, Gavin Moran and Mark Donald (Epic Games)

Game engine technology, when applied to traditional linear animation production pipelines, can positively alter the dynamics of animated content creation. With real-time interactivity, the iterative revision process improves, flexibility during scene assembly increases, and rendering overhead can potentially be eliminated.

Keynote

Real-Time Technology and the Future of Production

– Kim Libreri, CTO, Epic Games

Kim Libreri has pushed the limits of technology and visual artistry his entire career. As CTO of Epic Games, he is pushing the Unreal Engine beyond games, enabling new forms of interactive media and entertainment, virtual reality, visualization, and virtual production. Filmmakers now produce content that is more than just pixels, and real-time technology is more than an entertainment medium: it is changing the entire entertainment production process.

In this interview and discussion, Kim will talk about the future of visual content creation. How is real-time technology changing the way audiences experience entertainment? How is it changing the way films and visual entertainment are created? What will the content creation tools and production pipelines of the future be like? How do we build tools to empower the next generation of artists?

Session #3 – Characters

Capturing a Face

– David Corral, Darren Hendler, Steve Preeg, Carolyn Wong, Ron Miller, Rishabh Battulwar and Lucio Moser (Digital Domain)

Actor-driven facial performance retargeting is the process in which a digital character’s facial performance is directly animated by an actor’s performance. In this process we match the actor’s facial performance to a character’s face as realistically and plausibly as possible, capturing all of the actor’s subtle nuances. Character facial animation is generally created using a blendshape rig [Lewis et al. 2014] built from FACS shapes [Ekman and Friesen 1978]. Combining these shapes linearly and non-linearly is an effective way of generating high-quality facial performances. To translate the actor’s facial performance onto a digital character, animators manipulate the character’s facial rig frame by frame, finding the combination of blendshape weights and direct-manipulation controls that best matches the actor’s performance. This process is very laborious and requires a significant amount of animation and modeling time to achieve realistic results. We present Direct Drive, a facial animation process that is greatly sped up by additional geometric deformation and shape-space correction techniques to provide an impeccable performance transfer, while retaining the benefits of a blendshape rig so that animators can hand-direct performances.

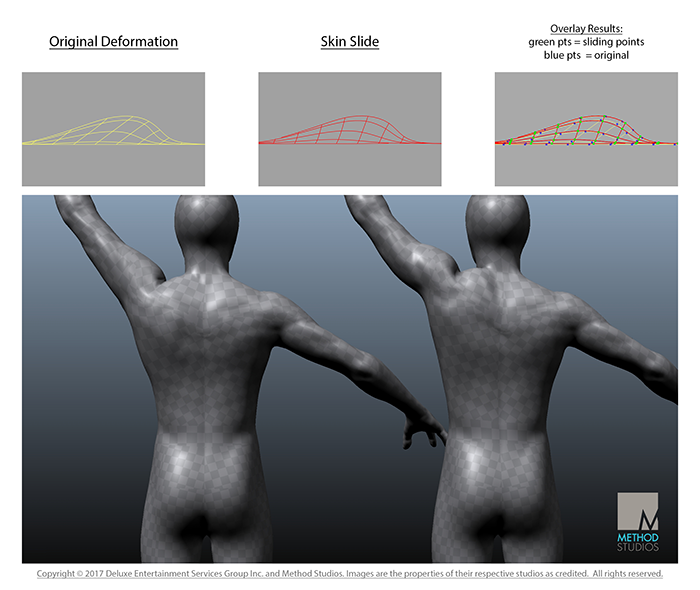
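The linear combination of blendshapes mentioned in this abstract can be illustrated with a short sketch. It assumes the common "delta" formulation, in which each target shape contributes its weighted offset from the neutral face; the function and variable names are hypothetical, and the sketch is not Direct Drive itself, nor does it cover the non-linear corrective combinations the abstract also mentions.

```python
from typing import List, Sequence, Tuple

# Hypothetical types for illustration: a mesh is a list of (x, y, z) vertices.
Vec3 = Tuple[float, float, float]
Mesh = List[Vec3]


def evaluate_blendshapes(neutral: Mesh, targets: Sequence[Mesh], weights: Sequence[float]) -> Mesh:
    """Assumed delta blendshape model: v = b0 + sum_k w_k * (b_k - b0).

    `neutral` is the rest face b0; each entry of `targets` is a sculpted shape
    b_k with the same vertex count and order; `weights` holds one w_k per target.
    """
    if len(targets) != len(weights):
        raise ValueError("expected one weight per target shape")
    for target in targets:
        if len(target) != len(neutral):
            raise ValueError("every target must have the same vertex count as the neutral mesh")

    result: Mesh = []
    for i, (nx, ny, nz) in enumerate(neutral):
        x, y, z = nx, ny, nz
        # Accumulate each target's weighted offset from the neutral pose.
        for target, w in zip(targets, weights):
            tx, ty, tz = target[i]
            x += w * (tx - nx)
            y += w * (ty - ny)
            z += w * (tz - nz)
        result.append((x, y, z))
    return result


if __name__ == "__main__":
    neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    smile = [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]     # hypothetical FACS-style target
    jaw_open = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]  # hypothetical FACS-style target
    print(evaluate_blendshapes(neutral, [smile, jaw_open], [0.5, 1.0]))
    # prints [(0.0, 0.5, 1.0), (1.0, 0.5, 0.0)]
```

This only shows the forward evaluation. The laborious manual step described above amounts to choosing `weights` (plus direct-manipulation controls) on every frame so that the evaluated mesh matches the actor.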

Efficient and Robust Skin Slide Simulation

– Jun Saito and Simon Yuen (Method Studios)

Skin slide is the deformation effect in which the outer surface moves along its tangent directions, caused by the stretching of other skin regions and/or the dynamic motion of the underlying tissues. Such an effect is essential for expressing the natural deformations of humans, animals, and tightly fitting costumes. Previous methods for achieving skin sliding were either manually controlled, or laborious to set up and expensive to compute. We present a novel, automated method that achieves convincing skin sliding with minimal setup and run-time computation. Our method takes advantage of recent developments in elastic-body simulation formulated as optimization using the Alternating Direction Method of Multipliers (ADMM). This approach, which generalizes position-based and projective dynamics, allows intuitive integration of arbitrary constraints, such as collision against the original deforming surface. The collision handling is accelerated and stabilized by exploiting the local nature of the sliding. To accelerate convergence even further while respecting the artist-driven deformation, we propose a simple method to resume the simulation from the previous local parameterization. Various production results using a Maya deformer implementation of this technique demonstrate its efficiency and effectiveness.

Lessons from the Evolution of an Anatomical Facial Muscle Model

– Lana Lan, Matthew Cong and Ronald Fedkiw (Industrial Light & Magic)

Recently, Industrial Light & Magic has begun exploring facial muscle simulation as a means of augmenting our blendshape-based facial animation workflow in order to attain higher-quality results. During this process, we discovered that a precise and accurate model of the underlying facial anatomy is key to obtaining high-quality facial simulation results that can be used for photorealistic hero characters. We present an overview of our workflow for developing such a model, along with some of the key anatomical lessons that were essential to the process.

Session #4 – Case Studies

The Fashionista Twins: Conjoined Hair in Trolls

– Brian Missey, Megha Davalath and Arunachalam Somasundaram (DreamWorks Animation)

This talk presents the techniques used to create the hair for ‘The Fashionista Twins’, Satin and Chenille, from the film Trolls. The conjoined twins are uniquely connected in a loop by their brightly colored hair. The seamless connection of their hair posed unique technical challenges in grooming, rigging, and the shot pipeline, and bringing their hair to life required a collaborative effort.

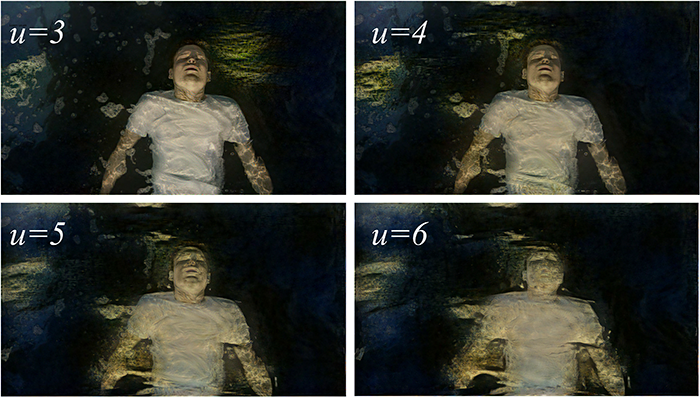

Bringing Impressionism to Life with Neural Style Transfer in Come Swim

– Bhautik Joshi (Adobe), Kristen Stewart and David Shapiro (Starlight Studios)

Neural Style Transfer is a striking, recently developed technique that uses neural networks to artistically redraw an image in the style of a source style image. This paper explores the use of this technique in a production setting, applying Neural Style Transfer to redraw key scenes in Come Swim in the style of the impressionistic painting that inspired the film. We present a case study on how the technique can be driven within the framework of an iterative creative process to achieve a desired look, and propose a mapping of the broad parameter space to a key set of creative controls. We hope this study can provide insights for others who wish to use the technique in a production setting and guide priorities for future research.

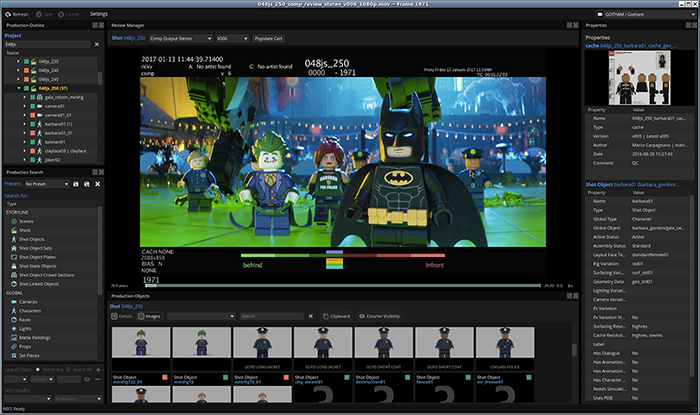
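The abstract does not spell out the mechanism, but in the widely used formulation of Neural Style Transfer by Gatys et al., "style" is captured by Gram matrices of a network's feature maps, and the output image is optimized so that its Gram matrices match those of the style image. The pure-Python sketch below shows only that per-layer style term. The data layout and function names are assumptions for illustration, and nothing here is claimed to be the Come Swim implementation.

```python
from typing import List

# Assumed layout for illustration: features[c][p] is the activation of
# channel c at flattened spatial position p, for a single network layer.
FeatureMap = List[List[float]]


def gram_matrix(features: FeatureMap) -> List[List[float]]:
    """G[i][j] = sum_p F[i][p] * F[j][p]: channel correlations with spatial layout discarded."""
    n = len(features)
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
        for i in range(n)
    ]


def style_loss(generated: FeatureMap, style: FeatureMap) -> float:
    """Per-layer style loss in the Gatys et al. form:
    E = 1 / (4 * N^2 * M^2) * sum_ij (G_ij - A_ij)^2,
    where N is the number of channels and M the number of spatial positions.
    """
    n = len(generated)
    m = len(generated[0])
    if len(style) != n or any(len(row) != m for row in style + generated):
        raise ValueError("feature maps must have matching shapes")
    g = gram_matrix(generated)
    a = gram_matrix(style)
    squared_error = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return squared_error / (4 * n ** 2 * m ** 2)


if __name__ == "__main__":
    print(gram_matrix([[1.0, 2.0], [3.0, 4.0]]))  # prints [[5.0, 11.0], [11.0, 25.0]]
    print(style_loss([[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]))  # prints 0.015625
```

A full system would extract these feature maps from a pretrained network at several layers and add a content term. Because the Gram matrix ignores where features occur and keeps only which features co-occur, minimizing this loss transfers the painting's texture and brushwork rather than its composition.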

LEGO Batman: Graphical Breakdown Editing – Optimising Assembly Workflow

– Oliver Dunn, Jeff Renton and Aidan Sarsfield (Animal Logic)

The ever-increasing complexity of the LEGO movies demanded a new way of managing project breakdowns. Animal Logic’s fine-grained, modular representation for assets [Sarsfield and Murphy 2011] meant that hundreds and thousands of shots, and shot objects, needed to be managed. It was clear from our experience on The LEGO Movie that our existing text-based spreadsheet approach would not scale to the demands of The LEGO Batman Movie.